Mechanical and Content Quality

Maintain quality with tools to find and fix mechanical errors in your modeling

I’m currently working with a client who is capturing a load of project knowledge into a single (EA) repository, pulling the skills & wisdom of 20-30 IT experts into one place.

Before they started doing this, they split the problem up into pieces so that each modeller could work semi-independently, without everyone tripping over everyone else. And this worked well for quite a while. They even had a small part of the model for obviously re-usable stuff, such as master lists of Actors and Components.

Now they are starting to put the pieces together, and some other problems are emerging. To help them make their model more consistent, and easier for other people to understand and re-use, I’ve been creating a family of tools and techniques.

We’ve chosen to put the errors in the model into two broad categories:

- “Mechanical” errors, which can be fixed by applying some simple(ish) rules, with minimal human involvement and bags of automation. Mechanical errors are self-defining: they are the kinds of errors which can be found by mechanical means.

- “Content” errors, where even though the ‘mechanics’ are correct, the model is still wrong. These can’t be fixed, or even detected, by mechanical means. Another human needs to look at the content and think hard.

The remainder of this post is about mechanical errors, and describes some new techniques we’ve used to find and fix them for users of Sparx Enterprise Architect.

Content Quality

Before we look at how we can ensure Mechanical quality in our models, a few words about the other side of the coin – content quality.

This is the kind of quality that is currently hard, or impossible, to check using any kind of automation. Even an AI would find it hard to check that a requirement which says ‘All wibbles shall be blue’ is correct. We can only ask the originator of the requirement what color they want their wibbles to be.

Achieving and maintaining content quality is about making sure that the people who know what the requirement should look like have had a chance to review it. And that probably isn’t by asking them to review a 500-page document over the weekend. It’s the result of a carefully thought-out programme of engagement with the knowledge holders, maybe in workshops, through small, readable, well-presented documents, or by giving them the information in the form of a Prolaborate website.

In all cases, even if your model is mechanically correct, you’re still only part way to a good quality model. Content quality is critical. Mechanical quality is important too, but it can be checked quickly and easily, so there’s no need to spend much human time on it. Much better to concentrate on content.

That’s all about content quality – the rest of this is about making the checking of mechanical quality as painless as possible.

Deciding what it's supposed to look like

Finding mechanical errors is about deciding what you think a model should look like, then checking to see if the model looks that way.

There are some things we need to describe when defining what a model should look like:

- Which types of element you should use (e.g. ‘Requirement’, ‘User Story’, ‘Stakeholder’)

- Which stereotypes of those elements you should use (if any), e.g. you might decide to have <<Functional>> and <<Non-functional>> Requirements

- Which links you allow between those elements/stereotypes, and what those links mean

- Which of the built-in or additional attributes you’re going to use (in EA, additional attributes are called Tagged Values). For example, you might decide that each ‘User Story’ has an attribute called ‘Story points’, which, in EA, needs to be a Tagged Value

- What kinds of diagrams you are going to draw, and what they should/may/must contain
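One way to make this list concrete is to write the meta-model down as data. The sketch below is a minimal Python illustration, not how EA or any particular tool stores it: the names `MetaModel`, `Element` and `check_element`, and the `Trace` link type, are hypothetical, and diagram rules are left out for brevity. It records element types, their allowed stereotypes, allowed links and required Tagged Values, then checks a single element against them.

```python
from dataclasses import dataclass, field


@dataclass
class MetaModel:
    """Hypothetical, minimal description of what a model should look like."""
    # Allowed element types, each mapped to the stereotypes allowed on it
    # (an empty set means no stereotype is allowed).
    element_types: dict[str, set[str]] = field(default_factory=dict)
    # Allowed links as (source type, link type, target type) triples.
    links: set[tuple[str, str, str]] = field(default_factory=set)
    # Tagged Values that every element of a given type must carry.
    required_tags: dict[str, set[str]] = field(default_factory=dict)


@dataclass
class Element:
    name: str
    element_type: str
    stereotype: str = ""  # empty string means "no stereotype"
    tags: dict[str, str] = field(default_factory=dict)  # Tagged Values


def check_element(meta: MetaModel, el: Element) -> list[str]:
    """Return human-readable violations of the meta-model for one element."""
    if el.element_type not in meta.element_types:
        return [f"{el.name}: type '{el.element_type}' is not in the meta-model"]
    problems = []
    allowed = meta.element_types[el.element_type]
    if el.stereotype and el.stereotype not in allowed:
        problems.append(
            f"{el.name}: stereotype <<{el.stereotype}>> is not allowed on {el.element_type}"
        )
    required = meta.required_tags.get(el.element_type, set())
    for tag in sorted(required - set(el.tags)):
        problems.append(f"{el.name}: missing Tagged Value '{tag}'")
    return problems


meta = MetaModel(
    element_types={
        "Requirement": {"Functional", "Non-functional"},
        "User Story": set(),
        "Stakeholder": set(),
    },
    links={("Stakeholder", "Trace", "Requirement")},  # hypothetical link type
    required_tags={"User Story": {"Story points"}},
)
print(check_element(meta, Element("Login story", "User Story")))
# ["Login story: missing Tagged Value 'Story points'"]
```

Checking links works the same way: look up the types at each end of a link and test whether the resulting triple is in `links`.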

A description of this is called a meta-model: a model of what a model should or does look like. Some people call this an ontology, which is a shame, because even most first-language English speakers don’t know the word, so I won’t use it again. But it’s in the article now, in case some smart person Googles it.

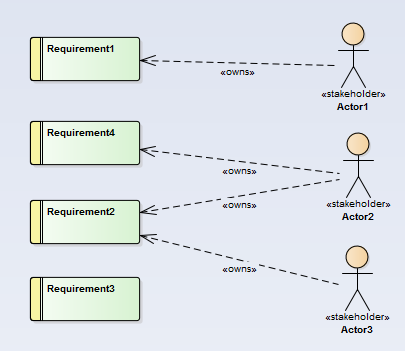

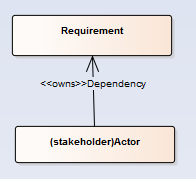

Your model may be very large, but may still have a simple meta-model. For example, if your model contains 100s of Stakeholders and 1000s of Requirements –

then your meta-model is really easy:

This says you’re only allowed two kinds of ‘thing’, and one kind of link between them.
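A meta-model this small fits in a few lines. The Python sketch below is hypothetical: the link type name `Trace` and its Stakeholder-to-Requirement direction are assumptions, and `check` is an illustrative name, not part of any tool. Note that the meta-model stays the same size however many Stakeholders and Requirements the model holds; only the model being checked grows.

```python
# Hypothetical encoding of the simple meta-model: two element types and one
# kind of link. The link type name "Trace" and its direction are assumptions.
ALLOWED_TYPES = {"Stakeholder", "Requirement"}
ALLOWED_LINKS = {("Stakeholder", "Trace", "Requirement")}


def check(elements: dict[str, str], links: list[tuple[str, str, str]]) -> list[str]:
    """Check a model against the meta-model.

    elements maps element name -> element type;
    links are (source name, link type, target name).
    """
    violations = []
    for name, etype in elements.items():
        if etype not in ALLOWED_TYPES:
            violations.append(f"element {name!r} has disallowed type {etype!r}")
    for src, ltype, dst in links:
        # Compare the link by the *types* at each end, not the element names.
        triple = (elements.get(src, "<unknown>"), ltype, elements.get(dst, "<unknown>"))
        if triple not in ALLOWED_LINKS:
            violations.append(f"link {src!r} -{ltype}-> {dst!r} is not allowed")
    return violations


elements = {
    "Sales Director": "Stakeholder",
    "REQ-001": "Requirement",
    "REQ-002": "Requirement",
}
links = [
    ("Sales Director", "Trace", "REQ-001"),  # allowed
    ("REQ-001", "Trace", "REQ-002"),         # Requirement-to-Requirement: not allowed
]
print(check(elements, links))
# ["link 'REQ-001' -Trace-> 'REQ-002' is not allowed"]
```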

But in reality, meta-models are much more complicated. The one I’m working on at the moment, a blend of ArchiMate, UML, BPMN and some bespoke stuff, has just over 100 types of element, and a few 100s of possible types of link between them.

And that’s just what it’s supposed to look like: when we look at what individual modelers have actually done, there are quite a few more types, which shouldn’t be there.

Quality checking against a Reference Model

You might say at this point that we should have decided what kinds of ‘things’ our model should contain before we started. This is excellent advice, but sadly, at the start of the project, we didn’t know what we’d need, so the meta-model has evolved very quickly.

This model of ‘what the model is supposed to look like’ we have called a ‘Reference Model’. If the meta-model which we see in our day-to-day model is the same as the Reference model, then we have no (mechanical) issues.

Model Expert is a set of tools which allow a day-to-day model to be analyzed and fixed, by comparing it to a Reference model.

What we do is:

- Analyze the day-to-day model to see what its meta-model actually is

- Compare that meta-model to the reference meta-model, to see where it differs: these are flagged as issues, or ‘violations’

- Finally, once we have identified the issues, fix them.
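The three steps can be sketched in a few lines of Python. This is not Model Expert’s implementation: it assumes the model has already been exported as a simple map of element ids to types plus a list of links, and the function names (`derive_meta_model`, `find_violations`, `fix_types`) and the reference contents are hypothetical. In the example, a modeller has used a `Feature` where the reference expects a `Requirement`.

```python
from collections import Counter

# Hypothetical reference meta-model: allowed element types and allowed
# (source type, link type, target type) triples.
REFERENCE_TYPES = {"Actor", "Component", "Requirement"}
REFERENCE_LINKS = {
    ("Actor", "Association", "Component"),
    ("Component", "Realisation", "Requirement"),
}


def derive_meta_model(elements: dict[str, str], links: list[tuple[str, str, str]]):
    """Step 1: find the meta-model the day-to-day model actually uses.

    elements maps element id -> type; links are (source id, link type, target id).
    Returns how often each element type and each link triple occurs.
    """
    types = Counter(elements.values())
    triples = Counter((elements[s], lt, elements[t]) for s, lt, t in links)
    return types, triples


def find_violations(types: Counter, triples: Counter):
    """Step 2: anything in the derived meta-model but not the reference is a violation."""
    bad_types = {t: n for t, n in types.items() if t not in REFERENCE_TYPES}
    bad_links = {k: n for k, n in triples.items() if k not in REFERENCE_LINKS}
    return bad_types, bad_links


def fix_types(elements: dict[str, str], renames: dict[str, str]) -> dict[str, str]:
    """Step 3 (one simple kind of fix): retype elements using an agreed mapping."""
    return {eid: renames.get(etype, etype) for eid, etype in elements.items()}


elements = {"e1": "Actor", "e2": "Component", "e3": "Feature", "e4": "Component"}
links = [("e1", "Association", "e2"), ("e4", "Realisation", "e3")]
print(find_violations(*derive_meta_model(elements, links)))
# ({'Feature': 1}, {('Component', 'Realisation', 'Feature'): 1})

fixed = fix_types(elements, {"Feature": "Requirement"})
print(find_violations(*derive_meta_model(fixed, links)))
# ({}, {})
```

Counting occurrences in step 1 is a deliberate choice: it shows at a glance whether a stray type is a one-off slip or a habit shared by several modelers, which helps decide whether to fix the model or extend the reference.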

See the Model Expert Help for more details.
